Image credit: Jose-Luis Olivares/MIT

MIT engineers have formulated a new algorithm that can help increase the speeds of complex systems such as drones and other autonomous robots.


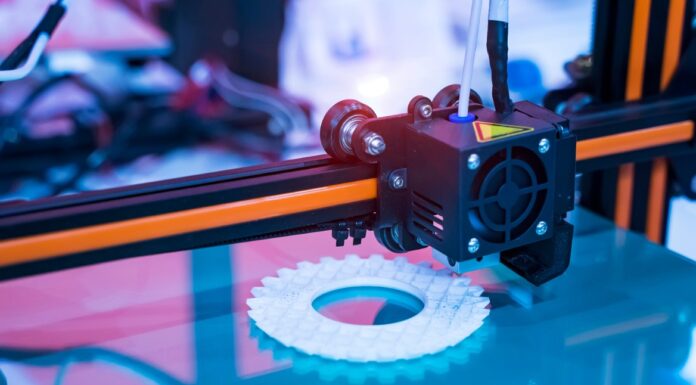

Today’s drones are limited to flying at about 30 miles per hour; flying any faster would compromise their ability to navigate safely around obstacles. This is because a drone’s camera can only process images so fast, one frame at a time.

However, engineers at MIT have taken a step toward lifting this limitation. The team created a new algorithm that fine-tunes a Dynamic Vision Sensor (DVS) camera, enabling it to reduce a scene to its most essential visual elements.

Developed by researchers in Zurich, the DVS camera is the first commercially available “neuromorphic” sensor — a class of sensors that is modelled after the vision systems in animals and humans.

Lead author Prince Singh, a graduate student in MIT’s Department of Aeronautics and Astronautics, said the sensor requires no image processing and is designed to enable, among other applications, high-speed autonomous flight.

“There is a new family of vision sensors that has the capacity to bring high-speed autonomous flight to reality, but researchers have not developed algorithms that are suitable to process the output data,” he explained.

“We present a first approach for making sense of the DVS’ ambiguous data, by reformulating the inherently noisy system into an amenable form.”

A drone with a DVS camera would not “see” a typical video feed, but a grainy depiction of pixels that switch between two colours, depending on whether that point in space has brightened or darkened at any given moment.
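To make the idea concrete, here is a minimal Python sketch of an idealised event camera. It assumes the simplified textbook model in which a pixel fires an event when its log brightness has changed by at least a fixed contrast threshold since that pixel's last event, with polarity +1 for brightening and -1 for darkening. The function name `dvs_events` and the threshold value are illustrative assumptions, and real DVS hardware reports changes asynchronously rather than from a sequence of frames.

```python
import math
from typing import List, Tuple

# (time_step, pixel_index, polarity): an illustrative event format, not the DVS's own
Event = Tuple[int, int, int]

def dvs_events(frames: List[List[float]], threshold: float = 0.2) -> List[Event]:
    """Idealised DVS model (assumption): emit an event whenever a pixel's
    log intensity moves by at least `threshold` from its level at the last event."""
    # Reference log intensity per pixel, initialised from the first frame.
    ref = [math.log(v) for v in frames[0]]
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for i, v in enumerate(frame):
            delta = math.log(v) - ref[i]
            if abs(delta) >= threshold:
                polarity = 1 if delta > 0 else -1  # +1 brightened, -1 darkened
                events.append((t, i, polarity))
                ref[i] = math.log(v)  # reset this pixel's reference after it fires
    return events

frames = [
    [1.0, 1.0, 1.0],
    [1.5, 1.0, 0.5],  # pixel 0 brightens, pixel 2 darkens
    [1.5, 1.0, 0.5],  # nothing changes
]
print(dvs_events(frames))  # [(1, 0, 1), (1, 2, -1)]
```

The unchanging pixel, and the unchanging final frame, produce no output at all, which is why event data is so much sparser than a video feed.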

Singh said the team’s algorithm can now be used to set a DVS camera’s sensitivity to detect the most essential changes in brightness for any given linear system, while excluding extraneous signals.
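The trade-off that a sensitivity setting controls can be illustrated with a toy single-pixel example. This is not the MIT team's algorithm, which the article says targets linear systems; it simply assumes the same idealised log-threshold model and counts events for a hypothetical pixel whose brightness flickers by ±3% and then genuinely doubles once. The helper names `make_trace` and `count_events` are illustrative.

```python
import math
from typing import List

def make_trace() -> List[float]:
    """Hypothetical pixel brightness: ±3% flicker, then a genuine doubling at t = 10."""
    flicker = [1.03 if t % 2 else 0.97 for t in range(20)]
    base = [1.0] * 10 + [2.0] * 10
    return [b * f for b, f in zip(base, flicker)]

def count_events(trace: List[float], threshold: float) -> int:
    """Count events from one pixel under the idealised log-threshold model."""
    ref = math.log(trace[0])
    n = 0
    for v in trace[1:]:
        delta = math.log(v) - ref
        if abs(delta) >= threshold:
            n += 1
            ref = math.log(v)  # reference resets only when the pixel fires
    return n

trace = make_trace()
for c in (0.05, 0.2, 1.0):
    print(f"threshold={c}: {count_events(trace, c)} events")
```

With a threshold of 0.05 the pixel fires 19 times, almost entirely in response to flicker; at 0.2 it fires once, for the real change; at 1.0 it misses the change entirely. Choosing the sensitivity well is what separates essential signals from extraneous ones.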

“We want to break that speed limit of 20 to 30 miles per hour, and go faster without colliding,” he said.

“The next step may be to combine DVS with a regular camera, which can tell you, based on the DVS rendering, that an object is a couch versus a car, in real time.”